Many leaders envision artificial intelligence (AI) as a transformative force for the Philippine workforce and economy, unlocking new opportunities, accelerating innovation, and enhancing national competitiveness. Yet vision alone is not enough. To truly harness the potential of AI, the Philippines must urgently establish a comprehensive governance framework: a strategic toolkit that ensures the responsible design, development, and deployment of AI systems in service of our people.

The Philippines has a distinct advantage: a globally competitive, English-speaking talent pool with strong digital skills. However, there is an urgent need to prepare for AI’s transformative impact on jobs and productivity. By investing in upskilling, reskilling, and digital education, the Philippines can not only safeguard current employment but also unlock new opportunities in emerging industries driven by AI. The emphasis is on making the country AI-ready through collaborative action across government, industry, and academia, so that digital transformation is inclusive, equitable, and future-proof.

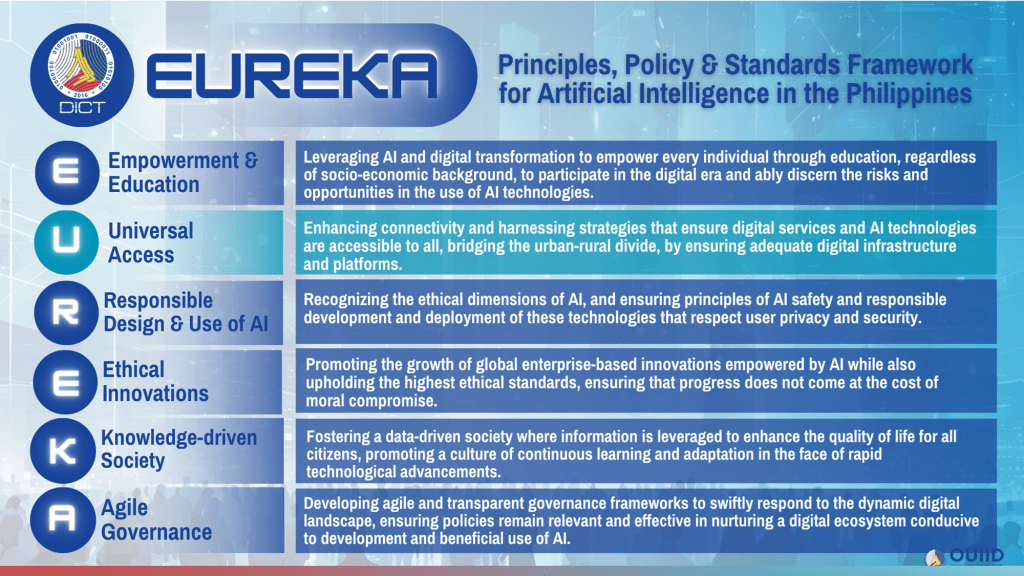

EUREKA FRAMEWORK FOR POLICY DISCUSSION

As the Department of Information and Communications Technology Undersecretary overseeing policy and industry, I introduced the EUREKA Framework for Policy Discussion in 2023. EUREKA stands for Empowerment & Education, Universal Access, Responsible Design & Use of AI, Ethical Innovations, Knowledge-driven Society, and Agile Governance. The framework serves as a discussion outline for designing and consolidating the Principles, Policy, and Standards Foundation for Artificial Intelligence in the Philippines, so that AI becomes a tool for inclusive, ethical, and sustainable national development.

At its core, Empowerment & Education emphasizes using AI and digital transformation to uplift every Filipino, regardless of socio-economic background, through education. This enables citizens to actively participate in the digital era while critically evaluating the risks and opportunities of AI. Universal Access focuses on digital inclusion by promoting infrastructure and connectivity that bridge the urban-rural divide, ensuring AI tools and platforms are available to all.

The framework advocates for the Responsible Design & Use of AI, stressing the importance of ethics, safety, and privacy in AI development and deployment. Ethical Innovations promote AI-driven enterprise growth while maintaining moral integrity, ensuring technological progress aligns with societal values. It then envisions a Knowledge-driven Society where data is used to enhance life quality, foster learning, and adapt to fast-paced technological changes. Finally, Agile Governance underscores the need for transparent, responsive policymaking that keeps up with the evolving digital landscape and ensures AI serves public interest.

Our mandate at the Department of Information and Communications Technology (DICT) to develop and implement national ICT policies is grounded in Republic Act No. 10844, which created the DICT. This legislation empowers the department to “formulate, recommend, and implement national policies, plans, and programs that will promote the development and use of ICT in the country.” In the context of emerging technologies like AI, this mandate is both a responsibility and a call to action—to ensure that AI is governed with foresight, aligned with national priorities, and guided by principles that uphold human dignity, innovation, and inclusive growth.

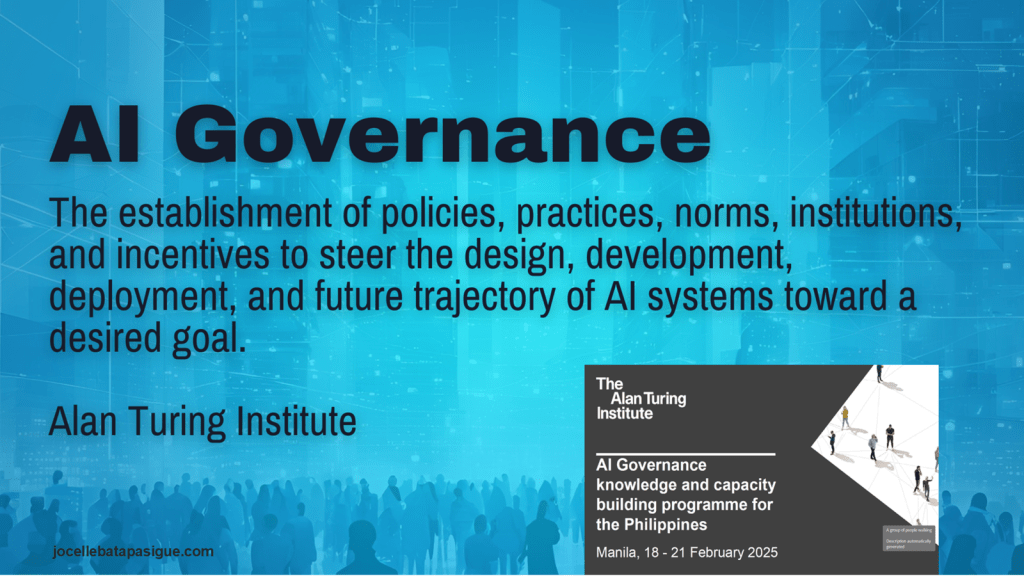

We need to seriously study the concept of AI governance as defined by the Alan Turing Institute, which emphasizes the critical role of robust policies, norms, institutions, and incentives in guiding the design, deployment, and evolution of artificial intelligence systems. AI governance ensures that the trajectory of AI aligns with societal goals and ethical standards, safeguarding public interest while fostering innovation. We were fortunate to take part in the Alan Turing Institute’s AI Governance Knowledge and Capacity Building Programme, held in the Philippines on February 18–21, 2025, where national stakeholders worked together to shape the future of AI in the country.

Effective AI governance must balance innovation with ethical safeguards, prioritizing human dignity, digital inclusion, and public trust. It is especially critical in the Philippine context, where inclusive and community-driven policymaking can ensure that AI serves to uplift rural areas, empower local talent, and reinforce democratic institutions. A concrete AI governance framework represents a step forward in nurturing a future-ready, fair, and digitally resilient Philippines.

There is a critical need for a comprehensive Artificial Intelligence (AI) Governance Policy Strategy in the Philippines. As AI technologies rapidly evolve, they bring both transformative opportunities and significant risks. The rationale behind developing such a policy lies in the urgency to implement proactive governance that can mitigate harms while still encouraging innovation and competitiveness. The slide emphasizes that AI systems affect national security, economic vitality, and the protection of human rights—making governance not just a technical concern but a societal imperative.

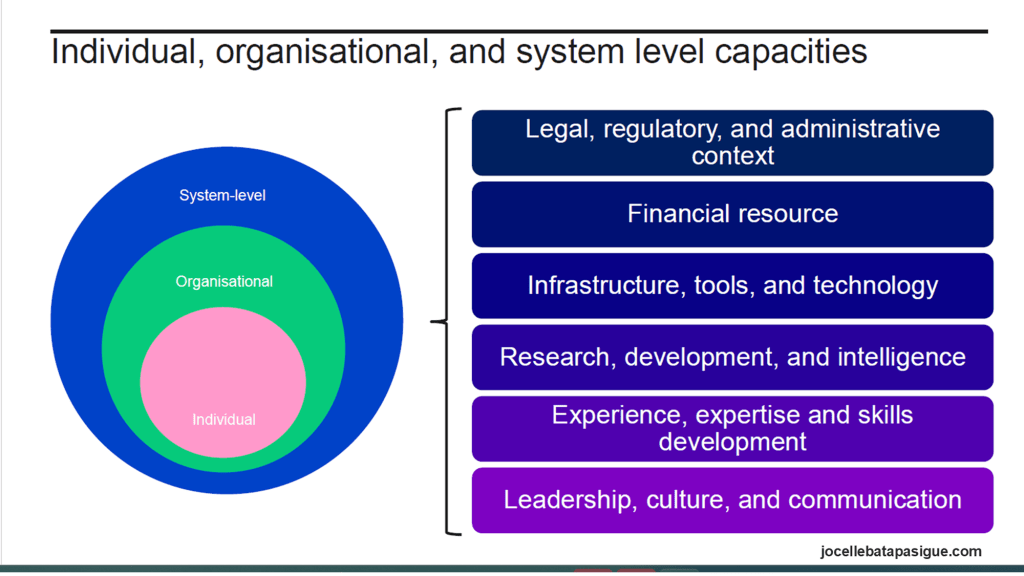

We see the importance of a multi-layered approach to AI capacity-building at the individual, organizational, and system levels, with each layer essential to a comprehensive and resilient digital governance framework. At the core is the individual, who must be equipped with relevant experience, expertise, and skills to engage meaningfully in AI development and deployment. We also need to ensure that we have organizational capacities, which include effective leadership, culture, and communication practices that foster innovation and collaboration across teams and sectors.

The system-level represents the broader ecosystem that must be shaped by robust legal, regulatory, and administrative frameworks, sustained financial resources, reliable infrastructure and technologies, and strong research and development ecosystems. These interconnected components create the enabling environment necessary for effective AI governance and innovation.

Building AI capacity is not just about technology—it is about people, institutions, and systems working in synergy. For the Philippines, this model reinforces the need to invest in digital upskilling, inclusive leadership, and policy coherence across local and national levels, particularly to empower rural communities and future-proof the Filipino workforce in the age of intelligent systems.

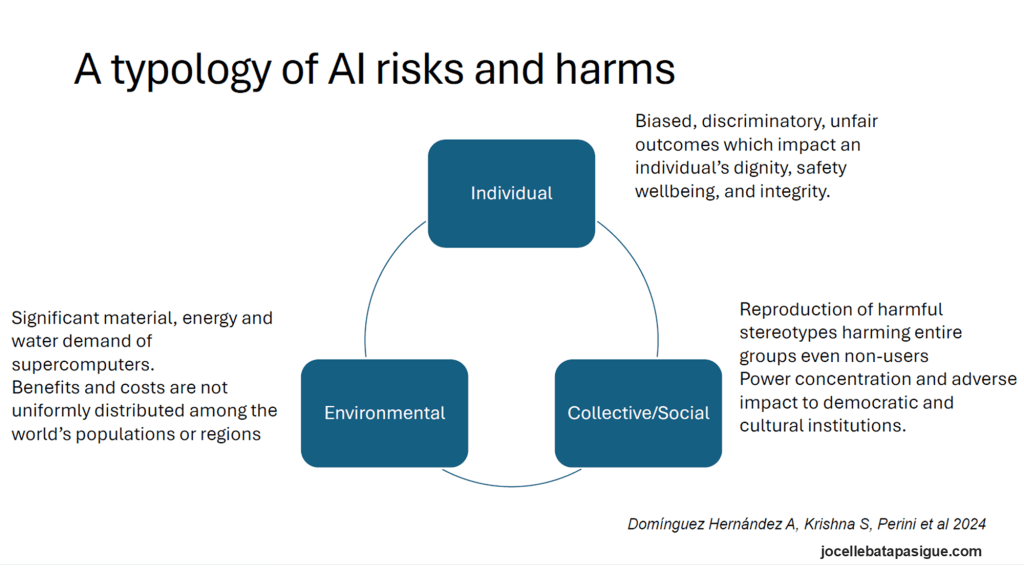

We also need to examine the typology of AI risks and harms, which categorizes the negative impacts of artificial intelligence into three interconnected domains: Individual, Collective or Social, and Environmental.

At the individual level, AI systems can generate biased, discriminatory, or unfair outcomes that directly compromise a person’s dignity, safety, well-being, and integrity. This includes cases where automated decisions affect access to healthcare, education, employment, or justice—particularly harming vulnerable populations.

On a collective or social scale, AI can reproduce harmful stereotypes, impacting entire communities, including those not directly interacting with the system. Moreover, it can concentrate power in the hands of a few tech entities or states, undermining democratic values, cultural integrity, and civic participation.

At the environmental level, AI’s development demands significant resources—materials, energy, and water, particularly in training large models using supercomputers. These costs are not shared equally, exacerbating global inequities between regions and populations that may not even benefit from AI innovations.

Understanding these multi-dimensional risks is essential for building ethical, inclusive, and resilient AI governance in the Philippines. This typology reinforces the need for community-informed policymaking, ethical design, and climate-conscious digital innovation, especially in the context of countryside development and youth empowerment. By addressing these harms comprehensively, we can ensure that AI serves as a force for equity, sustainability, and democratic integrity.

Through the Alan Turing Institute training, we policymakers examined the AI Project Lifecycle, a comprehensive 12-step framework that guides the creation and implementation of artificial intelligence systems. It is divided into three major phases: Design, Development, and Deployment.

The Design phase starts with Project Planning (1), setting objectives and timelines, followed by Problem Formulation (2) to define the AI problem clearly. Next comes Data Extraction or Procurement (3) to gather relevant data, and Data Analysis (4) to understand and prepare the dataset.

The Development phase begins with Preprocessing & Feature Engineering (5), where raw data is cleaned and refined, and moves to Model Selection & Training (6), where appropriate AI models are trained. It continues with Model Testing & Validation (7) to evaluate performance, and Model Reporting (8) to document outcomes and ensure transparency. Then, in the Deployment phase, the AI solution is brought to life through System Implementation (9) and User Training (10). To ensure effectiveness and safety, there is System Use & Monitoring (11), and finally, Model Updating or Deprovisioning (12), where the model is either refined or retired as needed.
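For teams that want to turn the lifecycle into a working checklist, the twelve steps can be written down as a small lookup table. The following is only an illustrative sketch in Python. The step names come from the description above, while the grouping of four steps per phase, the names `Phase`, `LIFECYCLE`, `steps_in`, and `review_checklist`, and the wording of the review question are assumptions made for this example.

```python
from enum import Enum


class Phase(Enum):
    DESIGN = "Design"
    DEVELOPMENT = "Development"
    DEPLOYMENT = "Deployment"


# Step names follow the text above. The four-steps-per-phase grouping
# is an assumption made for this sketch.
LIFECYCLE = [
    (1, "Project Planning", Phase.DESIGN),
    (2, "Problem Formulation", Phase.DESIGN),
    (3, "Data Extraction or Procurement", Phase.DESIGN),
    (4, "Data Analysis", Phase.DESIGN),
    (5, "Preprocessing & Feature Engineering", Phase.DEVELOPMENT),
    (6, "Model Selection & Training", Phase.DEVELOPMENT),
    (7, "Model Testing & Validation", Phase.DEVELOPMENT),
    (8, "Model Reporting", Phase.DEVELOPMENT),
    (9, "System Implementation", Phase.DEPLOYMENT),
    (10, "User Training", Phase.DEPLOYMENT),
    (11, "System Use & Monitoring", Phase.DEPLOYMENT),
    (12, "Model Updating or Deprovisioning", Phase.DEPLOYMENT),
]


def steps_in(phase):
    """Return the step names that belong to one phase, in order."""
    return [name for _, name, step_phase in LIFECYCLE if step_phase is phase]


def review_checklist():
    """Build one hypothetical governance question per lifecycle step."""
    return [
        f"{number}. {name} ({phase.value}): who is accountable, and what is documented?"
        for number, name, phase in LIFECYCLE
    ]


if __name__ == "__main__":
    for line in review_checklist():
        print(line)
```

A table like this gives an agency or local government unit one governance touchpoint per step, which can then be adapted to its own review process.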

Understanding the AI lifecycle is crucial for inclusive and ethical AI deployment. Each phase offers touchpoints for governance, transparency, capacity-building, and risk mitigation—especially vital in a Philippine context where digital readiness must extend to communities, MSMEs, and local governments. Responsible AI development means involving all stakeholders at every stage to ensure human-centric, inclusive outcomes.

As leaders, we should promote and ensure the so-called Accountability-by-Design, a foundational principle in ethical AI development. It asserts that all AI systems must be architected to support end-to-end answerability and auditability—meaning that every decision and outcome produced by the system should be traceable and reviewable. Central to this principle is the involvement of “humans-in-the-loop” throughout the entire design and implementation process. These human actors are essential for ensuring that the AI behaves as intended, aligns with ethical norms, and is responsive to real-world concerns.

Additionally, activity monitoring protocols must be embedded into AI systems to ensure continuous oversight, allowing for proactive identification of issues, errors, or unintended consequences. This is especially critical in high-stakes applications like healthcare, public safety, education, and government services.
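To make traceability, human-in-the-loop review, and activity monitoring more concrete, here is a minimal sketch in Python of what an audit trail could look like. It is not a prescribed design. The class names `DecisionRecord` and `AuditLog`, the field names, and the 0.9 confidence threshold that triggers human review are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DecisionRecord:
    """One traceable AI recommendation (all fields are illustrative)."""
    case_id: str
    model_version: str
    recommendation: str
    confidence: float
    reviewer: Optional[str] = None
    final_decision: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Keeps every record and flags low-confidence cases for a human."""

    def __init__(self, review_threshold: float = 0.9):
        # Assumed policy: anything below this confidence needs human sign-off.
        self.review_threshold = review_threshold
        self.records: List[DecisionRecord] = []

    def record(self, case_id, model_version, recommendation, confidence):
        entry = DecisionRecord(case_id, model_version, recommendation, confidence)
        self.records.append(entry)
        return entry

    def needs_human_review(self, entry: DecisionRecord) -> bool:
        return entry.confidence < self.review_threshold

    def sign_off(self, entry: DecisionRecord, reviewer: str, final_decision: str):
        # The human reviewer, not the model, owns the final decision.
        entry.reviewer = reviewer
        entry.final_decision = final_decision

    def unreviewed(self) -> List[DecisionRecord]:
        """Monitoring view: flagged cases that no human has reviewed yet."""
        return [
            r for r in self.records
            if self.needs_human_review(r) and r.reviewer is None
        ]


if __name__ == "__main__":
    log = AuditLog()
    entry = log.record("case-001", "model-v1", "approve", 0.62)
    print(len(log.unreviewed()))  # one flagged case awaiting review
    log.sign_off(entry, "reviewer-a", "deny")
    print(len(log.unreviewed()))  # none left after sign-off
```

The point of the sketch is the shape of the record: each outcome carries the model version, the time, and the name of the person who reviewed it, so that a later audit can reconstruct who decided what and on what basis.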

Accountability-by-Design is not optional—it is a non-negotiable requirement for inclusive, rights-respecting AI in the Philippines. It ensures that technology does not operate in isolation from the people and values it is meant to serve. Embedding accountability safeguards the public trust, protects citizens from harm, and reinforces our advocacy for transparent, human-centered digital governance across all sectors.

Again at the Alan Turing Institute Training, we delved into the six foundational principles essential for building responsible and trustworthy artificial intelligence systems. These guiding values—Sustainability, Safety, Accountability, Fairness, Explainability, and Data Stewardship—represent the ethical compass of AI governance and design.

Sustainability emphasizes that AI projects must be developed with ongoing sensitivity to their real-world social, environmental, and economic impacts. Safety requires AI systems to be accurate, robust, secure, and technically reliable, safeguarding against failures and vulnerabilities. Accountability ensures that AI projects are transparent and auditable, making all decisions and actions traceable to responsible parties. Fairness demands active mitigation of biases and safeguards against discriminatory outcomes, particularly critical in diverse societies like the Philippines. Explainability focuses on the clarity and interpretability of AI decisions, enabling users and stakeholders to understand and trust system outputs. Finally, Data Stewardship centers on maintaining high standards for data quality, integrity, privacy, and protection throughout the AI lifecycle.

I fully uphold these principles as cornerstones of ethical and inclusive digital transformation. By embedding these principles in every stage of AI development, we not only ensure compliance and safety but also uphold human dignity and societal well-being in our digital future.

As many say, there is no “silver bullet” or one-size-fits-all solution for AI governance. Instead, governing AI systems requires a diverse toolkit of strategies and frameworks tailored to the specific context in which these technologies operate. This means that a combination of legal, ethical, technical, and procedural tools must be applied, depending on the particular use case and societal needs.

Importantly, AI governance must be context-sensitive, reflecting the unique cultural, economic, and geographic realities of a country or community. For example, what works in an advanced urban tech hub may not be suitable for rural provinces in the Philippines, where access to infrastructure and digital literacy levels may differ.

Finally, we have to always remember that governance is not static—it must involve continuous monitoring, learning, and re-evaluation. AI evolves rapidly, and so too must the policies, safeguards, and accountability mechanisms that govern it. I advocate for a dynamic and inclusive governance model, rooted in local realities and responsive to change, to ensure that AI serves the people—especially the underserved—with dignity, fairness, and sustainability.

It is important for us as policymakers to understand the essential tools of AI governance: principles and frameworks, assurance and standards, and legislation and regulation—each playing a critical role in building responsible and accountable AI ecosystems.

First, Principles & Frameworks provide the overarching values and norms that guide ethical AI development. These may include transparency, fairness, human rights, and sustainability—foundational to ensuring that AI benefits all sectors of society, especially vulnerable populations.

Second, Assurance & Standards define the roles, responsibilities, processes, and benchmarks that ensure accountability. This involves operationalizing AI governance by setting clear expectations for actors across the ecosystem—from developers and policymakers to civil society and end-users. It ensures consistency, safety, and quality through enforceable best practices.

Third, Legislation & Regulation establishes the rights and obligations that protect individuals and communities. It ensures there are legal safeguards in place—such as privacy rights, redress mechanisms, and anti-discrimination laws—to address AI-related harms and build trust.

These tools must not operate in isolation. Effective AI governance requires a harmonized approach, shaped by local context and anchored in inclusive, participatory policymaking. In the Philippines, integrating these tools ensures that AI advances not just innovation, but social justice, digital inclusion, and public trust—especially for the countryside and youth sectors we are dedicated to uplifting.

ROLE OF AI PRINCIPLES AND FRAMEWORKS

Principles and frameworks in AI governance are typically voluntary, non-binding documents that articulate the core values and ethical norms essential for the responsible development and use of artificial intelligence. Rather than enforceable laws, these principles serve as guiding foundations that inform how AI should be created and deployed with a focus on human rights, fairness, transparency, and accountability.

Such frameworks often emerge from intergovernmental and multilateral collaborations, reflecting shared commitments across nations. They help harmonize global understandings of ethical AI and are commonly adopted by governments, institutions, and industries as a moral compass to steer their AI strategies.

Though non-binding, these documents can influence political priorities and shape national legislation, regulatory actions, and institutional behavior. They help “set the tone” for how AI is governed, especially in emerging economies like the Philippines, where policy development must align with global standards while remaining attuned to local socio-economic and cultural realities.

These frameworks must be contextualized for inclusive digital transformation, ensuring that rural communities, youth leaders, and marginalized voices are part of shaping how AI technologies are governed and applied. These principles, while soft in nature, are powerful tools for shaping ethical, inclusive, and future-ready digital ecosystems.

IMPORTANCE OF ASSURANCE AND STANDARDS IN THE GOVERNANCE OF AI SYSTEMS

Assurance refers to the process of building confidence and ‘justified trust’ in AI technologies through independent third-party evaluations. It serves as a critical trust mechanism to ensure that AI systems are not only technically sound but also ethically aligned and safe for public use.

Standards, on the other hand, are voluntary guidelines that define expert-agreed best practices on how AI should be developed, tested, and implemented. These standards reflect the consensus of technical experts, often developed within professional or international committees, and ensure consistency and quality across the entire AI lifecycle—from design and deployment to monitoring and decommissioning.

Crucially, these standards integrate ethical principles and accountability into technical processes, promoting responsible innovation. They are not legal mandates but function as essential tools to guide industry and government toward more trustworthy and transparent AI systems.

In the Philippine context, especially in countryside and youth-focused initiatives, such standards are invaluable in protecting public interest, ensuring inclusive access, and fostering locally grounded, globally aligned AI development. Assurance mechanisms strengthen public trust and are key to building a safe, human-centered digital ecosystem.

ROLE OF LEGISLATION AND REGULATION AS A CORE PILLAR OF AI GOVERNANCE

Legislation and regulation are mechanisms and binding rules that regulated entities must follow, alongside non-binding recommendations that offer guidance for responsible behavior. Together, they create a structured environment where AI development and deployment can occur safely and ethically.

Laws and regulations are essential because they define the rights and entitlements of individuals and communities affected by AI, while also establishing the obligations of developers, deployers, and decision-makers. These legal tools ensure that actors in the AI ecosystem are accountable to society, especially in areas such as data privacy, algorithmic transparency, and non-discrimination.

Crucially, these rules are enacted and enforced by public authorities—including legislatures, government agencies, and regulatory bodies—whose role is to uphold the public interest. In the Philippine context, this includes not just compliance but the promotion of equity, inclusion, and national stability, particularly for rural populations, micro-entrepreneurs, and the youth.

We need new laws that are not only technical in nature but also socially grounded—protecting human dignity, bridging digital divides, and ensuring that AI serves as a force for inclusive, sustainable development. Strong legal frameworks are indispensable in building a trustworthy digital future for all Filipinos.

There are already several key international frameworks that guide the responsible governance of artificial intelligence. These documents represent the shared ethical values, principles, and regulatory goals of nations and institutions working to ensure AI systems benefit humanity while mitigating risks and harms.

The OECD AI Principles (2019, updated 2024) are among the earliest globally endorsed frameworks, emphasizing human-centered values, transparency, and accountability. The UNESCO Recommendation on the Ethics of AI (2021) takes a broader, inclusive approach by promoting universal human rights, cultural diversity, and sustainable development through AI. The ASEAN Guide on AI Governance and Ethics (2024) reflects regional perspectives and priorities in Southeast Asia, underscoring contextual relevance and collaboration among member states.

The Hiroshima AI Principles and Code of Conduct (2023) aim to align AI development with peace, democracy, and stability, while the Bletchley Declaration (2023) and the Seoul Declaration (2024) address emerging risks such as frontier AI and promote international cooperation for safety and governance.

These frameworks are vital references for shaping local and national AI policies in the Philippines, especially in advocating for ethics, inclusion, transparency, and rural digital empowerment. They serve as global guideposts that can be adapted to Filipino realities, helping us ensure AI upholds human dignity, supports democratic values, and bridges—not deepens—inequality.

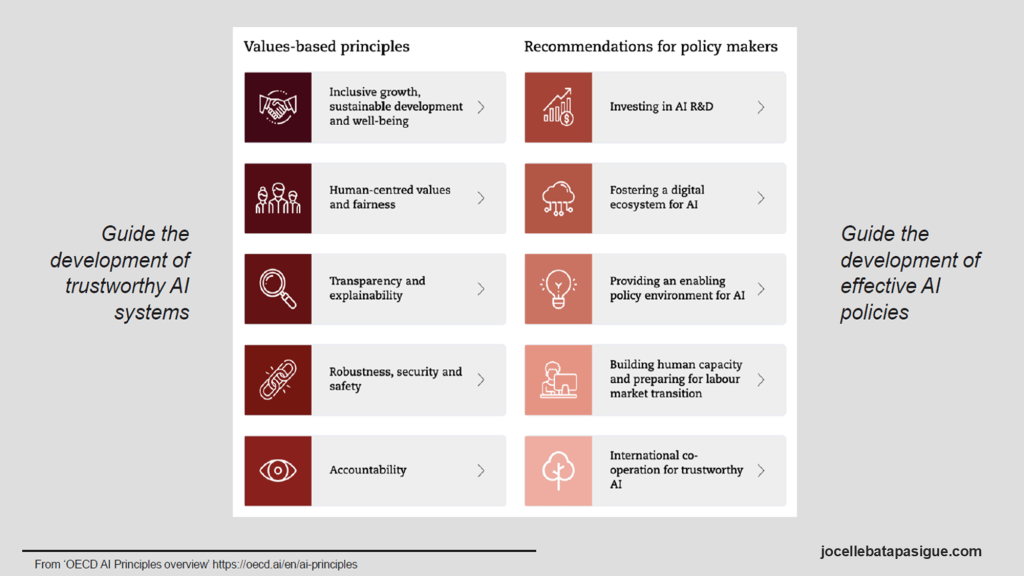

The OECD AI Principles offer a dual framework for both building trustworthy AI systems and shaping effective AI policies. They outline values-based principles that guide ethical AI development: ensuring inclusive growth and sustainability, respecting human-centered values and fairness, promoting transparency and explainability, maintaining robustness, security, and safety, and embedding accountability throughout the AI lifecycle. These principles are essential for ensuring AI systems serve the common good, particularly in diverse and developing societies.

Policy recommendations for governments and decision-makers include investing in AI research and development (R&D), fostering a digital ecosystem that supports innovation, and creating a policy environment conducive to responsible AI. Moreover, it emphasizes the need to build human capacity for navigating AI’s impact on the labor market and to enhance international cooperation for aligned global standards and trust-building.

This framework reflects my advocacy for ethical and inclusive digital transformation in the Philippines. These principles and recommendations should guide national AI strategies to ensure that technological growth not only uplifts innovation but also prioritizes digital inclusion, rural empowerment, and the protection of human dignity. By grounding AI governance in shared values and forward-looking policy, we build a future-ready, fair, and resilient digital society.

The UNESCO Recommendation on the Ethics of Artificial Intelligence is a globally recognized framework adopted in 2021 to guide nations in the responsible and inclusive development of AI. Its principles establish a human-centered foundation for AI that prioritizes ethics, human rights, and global equity.

Key principles include Do No Harm, underscoring the importance of preventing negative consequences in AI deployment; Safety and Security, to ensure systems are robust and trustworthy; and Fairness and Non-discrimination, aiming to eliminate bias and uphold social justice. The recommendation promotes Sustainability, aligning AI with environmental and societal well-being, and affirms the Right to Privacy and Data Protection, protecting citizens in the digital age.

Crucially, the framework demands Human Oversight and Determination, ensuring humans remain in control, and insists on Transparency and Explainability, so systems can be understood and held accountable. It also advocates for Responsibility and Accountability, Awareness and Literacy, and Multi-stakeholder Governance, emphasizing the need for inclusive dialogue among governments, civil society, academia, and industry.

These principles are especially vital for the Philippines. They align with our advocacy for digital empowerment with dignity, ethical innovation, and inclusive governance. By adopting and contextualizing these principles, we can shape an AI future that respects Filipino values, uplifts the countryside, and ensures technology serves humanity, not the other way around.

The guiding principles of the ASEAN Guide on AI Governance and Ethics provide a regionally grounded framework for Southeast Asian countries to promote safe, inclusive, and ethical AI. The framework reflects ASEAN’s commitment to shaping AI in a way that supports economic growth, human dignity, and social trust across diverse cultural and political contexts.

Key principles include Transparency and Explainability, ensuring AI decisions can be understood and scrutinized, and Fairness and Equity, which aim to prevent bias and protect vulnerable populations. Security and Safety and Robustness and Reliability emphasize the technical soundness of AI systems, ensuring they function correctly and are resilient to misuse or failure.

Equally important are Human-Centricity, which places people—not machines—at the heart of innovation; Privacy and Data Governance, safeguarding individuals’ rights over their personal data; and Accountability and Integrity, ensuring responsible oversight and adherence to ethical standards throughout the AI lifecycle.

I view this regional guide as a vital tool for the Philippines, reinforcing our national values of inclusivity, digital empowerment, and ethical innovation. By aligning with ASEAN’s principles, we can develop AI systems that are not only competitive but also culturally respectful, socially just, and resilient, which is particularly beneficial for our youth, countryside innovators, and grassroots digital leaders.

Here are key recommendations from the ASEAN Guide on AI Governance and Ethics specifically aimed at policymakers across Southeast Asia. They highlight the strategic actions needed to build inclusive, ethical, and sustainable AI ecosystems in the region.

First, it emphasizes the need for national investment in talent, start-ups, and AI research and development. This calls for governments to support local innovation ecosystems, promote digital entrepreneurship, and nurture AI skills—especially among the youth and in underserved communities. Such investment ensures regional competitiveness while addressing development gaps.

Second, it advocates for raising public awareness about the societal impact of AI. This includes fostering critical understanding among citizens of how AI shapes jobs, rights, information access, and democracy. Public education is essential for empowering people to engage with AI technologies meaningfully and safely.

Lastly, the guide encourages regional cooperation by establishing a working group on AI governance. This group would enable ASEAN member states to share knowledge, align standards, and respond collectively to emerging risks, ensuring that AI development respects local contexts while upholding shared ethical values.

These recommendations are highly relevant to the Philippine digital transformation agenda. They echo our mission to invest in countryside innovation, youth empowerment, and ethical policy design, and to take an active role in shaping ASEAN’s inclusive digital future through collaboration, creativity, and service-oriented leadership.

The Hiroshima AI Process (HAIP) is a global initiative launched during Japan’s G7 presidency in 2023, aimed at setting ethical and operational guardrails for advanced AI systems, including foundation models and generative AI. As powerful AI technologies evolve rapidly, HAIP serves as a coordinated effort to ensure their development and use remain aligned with shared democratic values, human rights, and global safety.

Central to HAIP is a Code of Conduct, which provides clear guidance on responsible practices for AI developers and users. It is structured around 11 guiding principles and follows a risk-based approach, recognizing that not all AI applications carry the same level of risk and thus require tailored governance strategies.

Importantly, HAIP respects sovereignty and contextual diversity, allowing individual jurisdictions to determine how to implement these guidelines. This flexibility ensures that countries can adapt the framework to their own legal, cultural, and socio-economic conditions.

I see the Hiroshima AI Process as a crucial milestone in the global movement for ethical AI governance, one that the Philippines must actively engage with. By aligning with such multilateral frameworks, we can strengthen our local policies, empower our digital workforce, and uphold ethical standards, especially as we advance countryside innovation, youth AI literacy, and inclusive digital transformation.

The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023

The Bletchley Declaration (2023) is a landmark agreement signed by 28 countries during the UK’s AI Safety Summit, with signatories including major global players such as the European Union and China, as well as the Philippines. This declaration marks a critical step in international cooperation to address the complex and evolving risks associated with “frontier” AI systems, meaning highly advanced technologies like generative AI and large foundation models.

The declaration expresses a strong commitment to collaborative efforts in identifying, assessing, and mitigating AI safety risks. It underscores the importance of proactive, science-based policymaking, driven by shared research and evidence-based dialogue across borders. This includes working together to establish norms, safety testing mechanisms, and regulatory approaches for high-risk AI applications that could affect global security and stability.

I celebrate the Philippines’ participation in this global initiative. It reflects our country’s growing role in shaping a safe, ethical, and inclusive digital future. The Bletchley Declaration aligns with my advocacy for science-driven governance, human dignity, and international solidarity—especially as we empower our youth, countryside innovators, and policy leaders to contribute to the global AI discourse while grounding our actions in Filipino values and needs.

Seoul Declaration of the AI Seoul Summit for Safe, Innovative, and Inclusive AI

The Seoul Declaration (2024) is a global agreement that builds on the momentum of the Bletchley Declaration (2023) and deepens international cooperation around AI safety and governance. Announced at the AI Seoul Summit, this declaration reflects a growing global consensus on the need for science-based, interoperable, and collaborative frameworks to manage the risks of advanced AI systems.

One of its most significant contributions is the commitment to create an international network of AI safety institutes, fostering global collaboration on AI safety science. This network aims to generate shared knowledge, develop testing methodologies, and monitor risks in real time across jurisdictions.

The declaration also promotes the interoperability of AI governance frameworks, encouraging alignment across countries while respecting national sovereignty. This helps ensure that AI systems developed in one part of the world remain safe, fair, and compliant when used elsewhere.

Additionally, the declaration introduces an Interim International Scientific Report on the Safety of Advanced AI, chaired by renowned expert Yoshua Bengio, emphasizing evidence-based policymaking as a foundation for trust and innovation.

I see this declaration as vital to shaping a resilient and ethical digital future for the Philippines and ASEAN. It aligns with our core advocacies: ethical AI, inclusive policymaking, and the development of local capacity to contribute meaningfully to global standards. By participating in such frameworks, we uplift Filipino voices, protect human dignity, and empower communities from the countryside to the capital to benefit from AI in a safe and just manner.

AI Safety Institutes

We already have many AI Safety Institutes, which are key mechanisms emerging from the Bletchley and Seoul Declarations to ensure global collaboration in scientific research on AI safety. These institutes aim to develop, evaluate, and apply research-based frameworks that help monitor and guide the development of advanced AI technologies responsibly.

The role of these institutes centers on AI evaluation—systematically assessing risks associated with AI systems, particularly foundation and generative models—and translating scientific findings into practical regulatory and governance tools. Their objective is to ensure that AI development aligns with safety, ethical standards, and societal well-being.

To date, AI Safety Institutes have been established in at least 10 jurisdictions, including major players such as the United Kingdom, United States, Japan, and Singapore. Many of these are government-mandated offices, signifying strong state-level commitment to AI governance and safety infrastructure.

I emphasize the importance of the Philippines participating in or partnering with such initiatives. These institutes can serve as models for building our own localized AI safety ecosystem, guided by our values of digital inclusion, human dignity, and countryside innovation. Establishing or contributing to such institutions will empower our researchers, youth, and policymakers to engage with global standards while crafting AI systems that uplift and protect Filipino communities.

CONVERGENCE OF ETHICAL AI PRINCIPLES

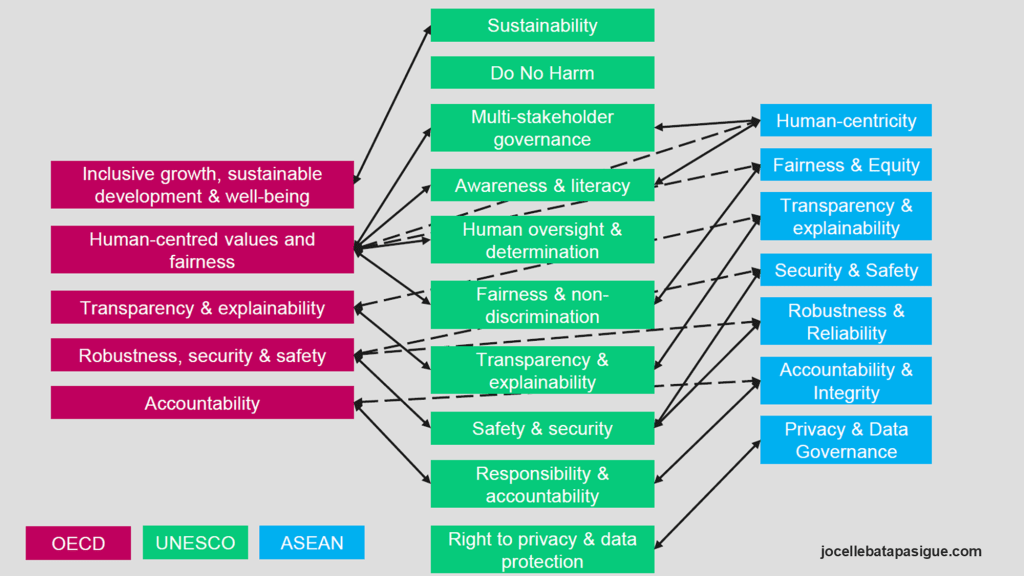

It is important for leaders to see how ethical AI principles converge across three major global frameworks: OECD (red), UNESCO (green), and ASEAN (blue). The diagram shows how diverse international efforts align around common values for trustworthy and safe artificial intelligence.

At the core of this diagram is the recognition that, despite geographic and institutional differences, all three frameworks advocate for human-centered, transparent, secure, and accountable AI. For instance:

- The OECD’s principle of “inclusive growth, sustainable development & well-being” maps directly to UNESCO’s sustainability and do no harm, and ASEAN’s human-centricity.

- “Human-centred values and fairness” from OECD aligns with UNESCO’s focus on fairness & non-discrimination and human oversight, as well as ASEAN’s fairness & equity.

- Transparency & explainability, robustness, security & safety, and accountability appear across all three frameworks, demonstrating universal agreement on core AI ethics dimensions.

- UNESCO’s right to privacy and data protection and ASEAN’s privacy & data governance reflect shared concern over safeguarding personal data in digital environments.

There is mutual reinforcement among frameworks, showing how they build upon one another to form a global ethical infrastructure for AI governance.

Indeed, global solidarity in AI ethics is possible and necessary. For the Philippines, this means we can confidently craft our own national AI governance roadmap—grounded in Filipino values and realities—while harmonizing with these global standards. This is key to empowering our youth, countryside innovators, educators, and policymakers, ensuring we don’t merely consume AI technology but actively shape it for inclusive, secure, and sustainable development.

PRIMARY POLICY FUNCTIONS OF AI STANDARDS

AI standards can serve as essential tools to regulate, guide, and harmonize the responsible development and deployment of AI technologies across sectors and borders, in the following ways:

Supporting compliance with legal or regulatory requirements – AI standards provide concrete guidance to translate high-level principles into operational practice. By enabling conformity assessments, they help offer a presumption of compliance with existing regulations. This is crucial in jurisdictions with varying legal frameworks, offering a common reference point to navigate differing compliance landscapes.

Governing public sector procurement contracts – Standards act as a government enforcement tool, ensuring that public procurement aligns with ethical and technical requirements for AI. This use of standards as a lever for accountability helps enforce responsible practices and can generate positive downstream effects in the private sector by influencing market expectations around interoperability and access.

Facilitating international trade – Standards promote trust and quality assurance in AI-related products and services, essential for cross-border transactions. By serving as a common reference point, they reduce regulatory fragmentation, enabling countries to meet requirements under trade agreements like the WTO’s Technical Barriers to Trade Agreement. This enhances the global competitiveness of AI innovators, especially in developing economies like the Philippines.

Establishing AI standards is not merely a technical endeavor—it is a governance imperative. For the Philippines, adopting AI standards that reflect our national values and capacities ensures that our innovations are safe, export-ready, and aligned with global norms, while also protecting Filipino consumers and citizens. These standards serve as the bridge between ethical intent and enforceable action in building a digitally empowered and globally engaged nation.

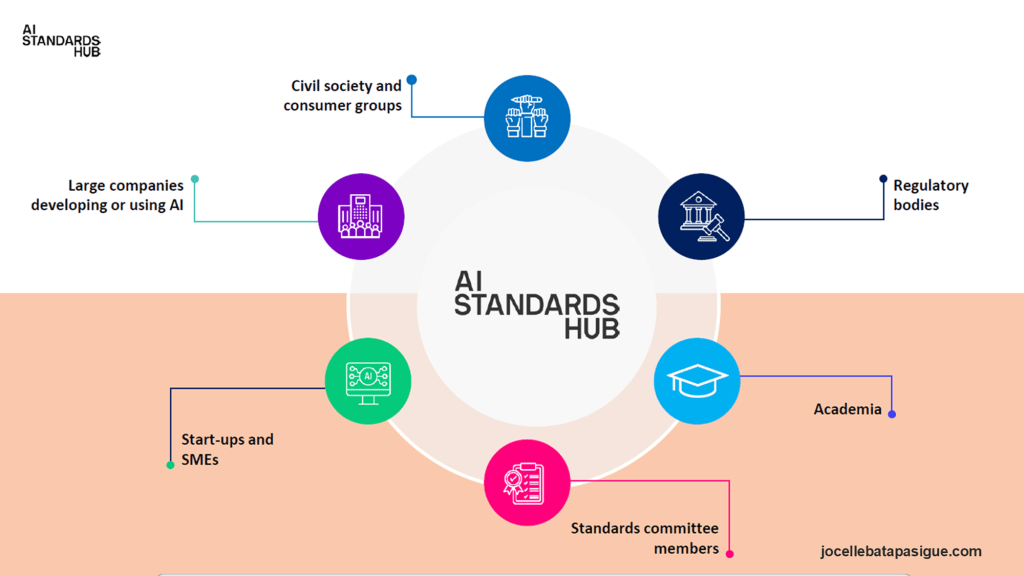

There is a need for a multi-stakeholder ecosystem supporting AI standards, one that brings together the diverse groups that contribute to shaping, implementing, and overseeing those standards. The diagram reinforces that developing robust, inclusive, and globally relevant AI standards requires collaboration across sectors, including government, industry, academia, and civil society.

One of the best examples is the AI Standards Hub, a centralized platform or initiative where knowledge, experience, and authority on AI standardization converge. It acts as a convening body and facilitator, ensuring inclusive participation in the standard-setting process.

Launched in October 2022, the AI Standards Hub is a collaborative effort led by the Alan Turing Institute, in partnership with the British Standards Institution (BSI) and the National Physical Laboratory (NPL). It is supported by the UK government’s Department for Science, Innovation and Technology (DSIT) and the Office for AI. The Hub aims to bring together stakeholders from industry, government, academia, and civil society to shape the development and adoption of AI standards. Surrounding the hub are seven key stakeholder groups:

- Civil society and consumer groups – These actors ensure that AI standards uphold public interest, protect rights, and reflect social values such as fairness, inclusivity, and accountability.

- Regulatory bodies – As the enforcers of law and policy, regulators play a critical role in aligning AI standards with national frameworks and ensuring their compatibility with legal mandates.

- Academia – Academic researchers provide evidence-based insights, technical rigor, and foresight into emerging trends, helping to ground standards in scientific credibility.

- Standards committee members – These individuals, often drawn from various sectors, draft, revise, and maintain technical standards, ensuring they are practical and implementable.

- Start-ups and SMEs – Smaller enterprises bring innovation and agility into the ecosystem. Their inclusion ensures that standards are accessible and supportive of digital entrepreneurship, especially in developing economies like the Philippines.

- Large companies developing or using AI – These entities often deploy AI at scale. Their participation ensures that standards are feasible, widely adopted, and scalable.

- AI innovators and ecosystem builders – Represented here as part of the start-ups/SMEs and large companies, these groups help translate AI standards into real-world applications that drive economic and social development.

Inclusivity of this standards ecosystem is essential for digital democracy and ethical governance. In the Philippines, building such a multi-stakeholder hub would empower local innovation while safeguarding national interests and ensuring AI technologies reflect our shared values of fairness, integrity, and resilience. This aligns with our call to strengthen public-private-academic partnerships in building a digitally inclusive and future-ready nation.

ROLE OF REGULATION IN SHAPING A TRUSTWORTHY AND SUSTAINABLE AI ECOSYSTEM

Regulation is not just about restriction—it is a powerful enabler of innovation when crafted wisely and inclusively. It establishes the rules of the game, creating an environment of regulatory certainty that encourages responsible AI development and adoption. For AI developers and adopters, clarity in regulation reduces ambiguity, supports investment decisions, and fosters innovation within safe and ethical boundaries.

Regulation also serves as a foundation for public trust, societal acceptance, and consumer confidence. When citizens see that AI is governed by fair, transparent, and enforceable rules, they are more likely to embrace AI-driven services and products. This trust is essential for inclusive digital transformation, especially in developing nations like the Philippines, where skepticism about technology can hinder progress.

Most importantly, regulation must strive to balance the immense opportunities of AI—in healthcare, education, jobs, and governance—with its potential risks, such as bias, privacy violations, or job displacement. This balance must be context-specific, guided by local realities, values, and development priorities. We must ensure that AI regulation supports human dignity, equity, and empowerment, particularly for underrepresented communities in the countryside. This way, we shape a future where AI serves all, not just the few.

AI regulation does not exist in isolation; it intersects with a broader legal and regulatory landscape. Existing laws, including criminal and civil statutes, cross-sectoral rules (e.g., data protection, consumer rights), and sector-specific regulations (such as those for health, finance, or education), already have implications for AI systems. Policymakers must therefore not assume that AI is entirely novel territory but instead assess how current legal frameworks apply to AI use cases.

However, because AI introduces new challenges and risks—such as autonomous decision-making, algorithmic bias, and accountability gaps—there may be a need to clarify how existing regulations apply. For example, how does liability in civil law translate when an AI makes a harmful decision? If current laws fail to provide adequate safeguards, new AI-specific regulations might be necessary to fill those gaps.

Most critically, there must be coherence between existing and new legal frameworks. This coherence ensures that emerging AI policies do not conflict with established legal principles, thereby fostering regulatory certainty, reducing compliance burdens, and enabling balanced innovation and protection. I emphasize that this is especially crucial in the Philippine context, where regulatory clarity will be key to promoting inclusive and ethical AI adoption, particularly for SMEs and countryside communities.

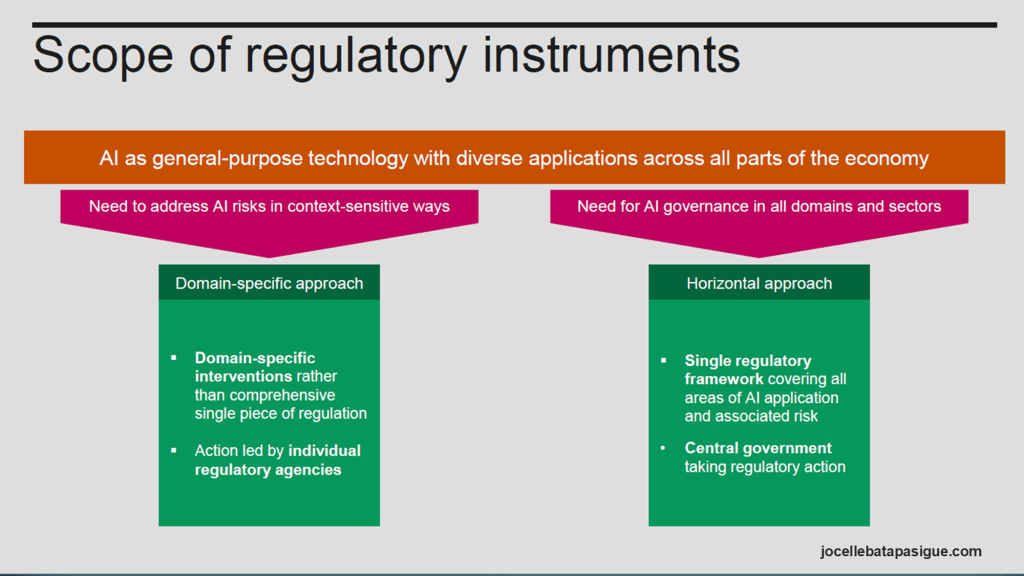

Because AI is a general-purpose technology with applications across all sectors of the economy, the scope of regulatory instruments must be tailored to it. This wide-ranging impact creates a dual imperative: to manage AI risks in context-sensitive ways and to ensure governance across all domains and sectors.

TWO MAIN REGULATORY APPROACHES

Domain-Specific Approach: This strategy involves targeted interventions that focus on specific sectors (e.g., healthcare, education, transportation), recognizing that different applications of AI pose different types of risks. Regulation is led by individual agencies, each with sectoral expertise, ensuring that interventions are relevant and precise.

Horizontal Approach: This approach proposes a single, overarching regulatory framework that spans all sectors and types of AI applications. The central government takes the lead in implementing this unified framework, aiming for consistency, interoperability, and wide applicability.

Domain-specific approaches tailor regulations to particular sectors like healthcare, finance, or education, allowing more precise governance. Horizontal approaches apply uniformly across sectors, aiming for consistency in governance across the entire AI landscape.

We also need to distinguish soft from hard measures in AI governance. Combined with the domain-specific and horizontal approaches, they map out the spectrum of regulatory tools available to policymakers and stakeholders.

Soft measures include non-binding tools such as guidelines, codes of conduct, voluntary standards, and principles. These are flexible and often adopted more quickly but may lack enforcement. Hard measures involve legally binding regulations, enforceable laws, and strict compliance mechanisms with penalties for non-compliance. These provide clarity and accountability but may take longer to implement and adapt.

I highlight the need for a hybrid approach in the Philippine context. Regulation must be contextualized to the realities of rural and urban communities, empowering local innovation while maintaining national alignment. This ensures AI adoption is both safe and empowering, especially for micro, small, and medium enterprises (MSMEs) and marginalized sectors.

This framework invites policymakers—especially in the Philippines—to consider hybrid strategies that blend contextual flexibility with structured oversight. For instance, countryside innovation initiatives may begin with soft, domain-specific measures to foster growth and experimentation, while gradually building toward hard, horizontal policies to ensure accountability and protection at scale.

Let us try to contextualize the soft-to-hard and domain-specific-to-horizontal regulatory framework by applying it to AI use in recruitment in the Philippines. The resulting map plots five illustrative governance strategies on a two-axis system, each marked with the Philippine flag to reflect relevant national implications.

- Top-Left (Hard + Domain-Specific): This quadrant shows legal reforms in labor and employment law, specifically tailored to AI-driven recruitment. Such regulation could mandate additional protections for job applicants and new compliance requirements for employers using AI tools in hiring, ensuring accountability and fairness within that sector.

- Top-Center (Hard + General): Here, the focus is on AI-specific mandates that impose bias mitigation and transparency requirements within recruitment processes. This reflects a move toward codified safeguards to protect applicants against opaque or discriminatory AI decisions.

- Top-Right (Hard + Horizontal): This segment advocates for cross-sectoral legislation that upholds individual data rights, including informed consent and the right to refuse AI processing of personal data. This form of governance cuts across all sectors, embedding data privacy and autonomy as foundational protections in the digital age.

- Bottom-Left (Soft + Domain-Specific): This area suggests voluntary guidance for recruiters and vendors of AI hiring tools, issued by sector-specific regulators. It encourages responsible AI use without enforcing strict compliance—ideal for fostering innovation while building awareness.

- Bottom-Right (Soft + Horizontal): Lastly, this quadrant includes voluntary standards to mitigate AI bias and enhance transparency across different industries. It provides a flexible framework for companies to self-regulate while promoting ethical practices.

In the spirit of inclusive and ethical technology advocacy, this framework reinforces governance models that protect dignity, foster trust, and ensure human-centric innovation, especially in critical livelihood domains like employment. Such balanced regulatory pathways are essential to promote digital justice and economic opportunity for the Philippines.

As we approach the milestone year of 2025, the completion of a comprehensive AI governance strategy is not merely a bureaucratic goal—it is a national imperative. In a time when artificial intelligence is rapidly reshaping economies, institutions, and daily life, the Philippines must act with both urgency and unity. Our governance framework must reflect our core values: inclusivity, fairness, innovation, and respect for human dignity. It must empower communities, protect rights, and guide our industries toward responsible AI adoption. By finalizing and operationalizing our AI governance strategy in 2025, we affirm our commitment to building a future where technology serves all Filipinos—uplifting every region, nurturing every talent, and securing our place in the global digital landscape with integrity and purpose.
